Evaluating NLP for Named Entity Recognition, Part 1: Requirements and Test Data

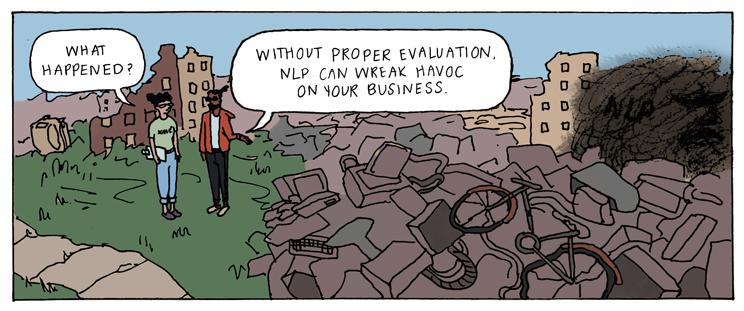

How do you know if a given natural language processing (NLP) package will do what you need? How can you evaluate it and justify your choice? This three-part blog post is your guide to taking the emotions out of evaluating NLP with steps for a disciplined evaluation of any NLP technology. It’s not as fast as “eyeballing” output, but the results will enable you to compare different NLP offerings and make a sound choice. Failing to do a proper evaluation can have serious repercussions on your downstream systems and embarrass your business. Plus, there is nothing like hard evidence when the CFO asks about your NLP expenditures.

We will call out “extra credit” steps for a more rigorous evaluation. Investing more time and effort will produce results you can take to the bank.

For ease of explanation, we’ll walk through the six steps of a disciplined evaluation using named entity recognition (NER), which extracts entities such as people, locations, and organizations, as the running example:

- Define your requirements

- Assemble a valid test dataset

- Annotate the gold standard test dataset

- Get output from vendors

- Score the results

- Make your decision.

Define your requirements

Are you using entity extraction to create metadata around names of people, locations, and organizations for a content management system? Are you hunting through millions of news articles daily in search of negative news about a given list of people and companies for due diligence? Or, are you sifting through social media posts to find names of products and companies, and then looking at sentiment around those entities to measure the effectiveness of an ad campaign?

Ask yourself these questions:

- What types of data will you analyze? Do you have sufficient data samples that are both “representative” and “balanced” (see definitions below)?

- What are your requirements for accuracy and speed?

- Do you know how to evaluate what constitutes successful NER in your downstream processing? When you feed “perfect” hand-annotated data into your system, does it produce the end results you expect?

Assemble a valid test dataset

This step is about compiling documents that will be fed into each candidate system. Just as an effective math test has to include content that really tests the knowledge of the pupils, test data (called a test corpus) should do the same by being representative and balanced.

- Representative means the documents should cover the type of text you want the NER system to process. That is:

  - domain vocabulary: financial, scientific, and legal

  - format: electronic medical records, email, and patent applications

  - genre: novel, sports news, and tweets.

  Using a sample of the actual data that the system will process is best. However, if your target input is medical records and confidentiality rules prevent using real records, dummied-up medical records containing a similar frequency and mixture of terms and entity mentions will suffice.

- Balanced means the documents in the test dataset should collectively contain at least 100 instances of each entity type you wish the system to be able to extract. Let’s suppose corporate entities (e.g., “Ipso Cola,” “Queen Guinevere Flour Company”) are the most important entity type for you to extract. You cannot judge the usefulness of the system if there are not enough corporate entities in the test data.

Extra credit: Your evaluation will be more accurate and reliable with more than 100 mentions of the entities that matter most to you, although this goal may not be possible for entity types that are sparse. The ideal number is 1,000 or more mentions each of people, locations, and organizations, if you can manage it.
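One way to check the balance requirement is to count mentions per entity type once some annotations exist. The sketch below is only an illustration, not a prescribed tool: it assumes each document is a dictionary with an `entities` list whose items carry a `type` label, and the names `count_mentions` and `balance_report` are hypothetical. The thresholds of 100 and 1,000 come from the guidance above.

```python
from collections import Counter

MIN_MENTIONS = 100     # minimum mentions per entity type (from the text above)
IDEAL_MENTIONS = 1000  # "extra credit" target (from the text above)


def count_mentions(documents):
    """Count entity mentions per type across the whole test corpus.

    Assumed (hypothetical) format: each document is a dict with an
    "entities" list, and each entity is a dict with a "type" key.
    """
    counts = Counter()
    for doc in documents:
        for mention in doc.get("entities", []):
            counts[mention["type"]] += 1
    return counts


def balance_report(documents, required_types,
                   minimum=MIN_MENTIONS, ideal=IDEAL_MENTIONS):
    """Return {entity_type: (mention_count, status)} for each required type."""
    counts = count_mentions(documents)
    report = {}
    for entity_type in required_types:
        n = counts[entity_type]  # a Counter returns 0 for unseen types
        if n >= ideal:
            status = "ideal"
        elif n >= minimum:
            status = "sufficient"
        else:
            status = "insufficient"
        report[entity_type] = (n, status)
    return report


if __name__ == "__main__":
    # Tiny made-up corpus, for illustration only.
    corpus = [
        {"entities": [{"type": "ORGANIZATION", "text": "Ipso Cola"}]},
        {"entities": [{"type": "ORGANIZATION",
                       "text": "Queen Guinevere Flour Company"}]},
    ]
    for entity_type, (n, status) in balance_report(
            corpus, ["ORGANIZATION", "PERSON", "LOCATION"]).items():
        print(f"{entity_type}: {n} mentions ({status})")
```

Any type reported as insufficient signals that the corpus needs more documents containing that entity type before the evaluation results can be trusted.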

This test corpus is the foundation for the validity of all your testing. If you can’t get actual input data, it is worth the time and effort to create a corpus that resembles the real data as closely as possible.

In short, before tasking an engineer with collecting even one file, make sure you understand your technical and business requirements, and how each affects the other, so they can guide you in evaluating and choosing an NLP technology.

Your requirements will heavily influence the guidelines for annotation and your final choice.